I was working in a migration project where we migrated SQL Server 2000 to SQL Server 2008 R2. Everything went fine except one SSIS package. It’s a simple package where we are importing data from a csv file. This csv file is created from Unix environment with TAB as column delimiter and CR\LF as row delimiter.The source file might have values for all the columns or it won’t have value for one or multiple columns. It worked perfectly fine in SQL Server 2000 however when we migrated to SQL Server 2008 R2 the behavior is totally changed. When you execute the package it will take data from the next rows for the missing column values.

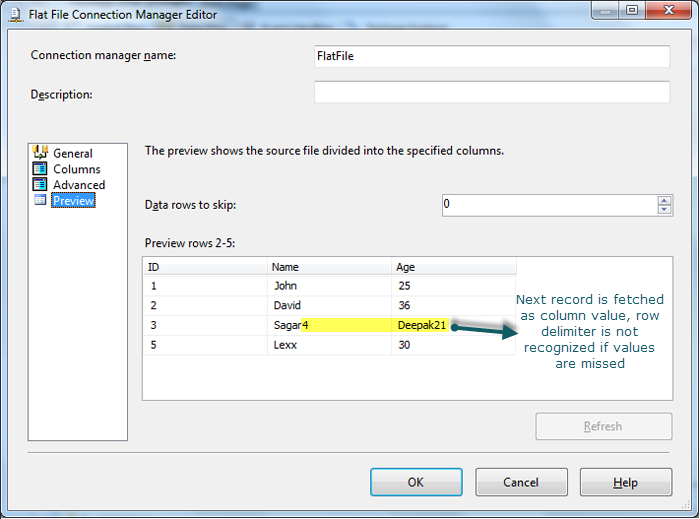

Let me explain the situation with an example. I have a flat file with 3 columns as shown below

ID Name Age

1 John 25

2 David 36

3 Sagar

4 Deepak 21

5 Lexx 30

When I execute the package I expect a blank as age for Sagar and this is the behavior in SQL 2000, however when I execute the package , it imported the data as below

As we didn’t do any changes to the package or to the db, we were wondering why this is happening. I started working on this and found out that this behavior is changed from SQL 2005 onwards. It’s Ragged right format now and that’s the reason its pulling data from next row. This issue is already raised in Microsoft Connect in the year 2006 so no hope for expecting a workaround from MS for this release. Good news is that this behavior is changed in SQL Server 2012 and it’s working fine in SQL 2012. Problem for me is that I don’t have any control on the source to change the delimiters, so I started researching for a workaround for this package to work properly with the same data.

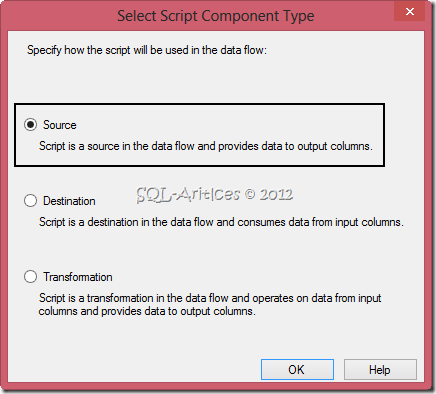

I was looking all the components in BIDS to make use of it to resolve the issue. I planned to use “Script Component” (Script Transformation Editor) and thought of writing script to process the source file and then passing it to SQL Server. In this method I’m going to write the valid data to actual destination table and then the invalid data to a dummy table to process manually. One problem with this method is that SSIS package has to process the source file line by line.

To demonstrate I used a new package,

- Drag “Data Flow Task” and get into it.

- Now add “Script Component Task” and then select “Source” option button as this has to serve as source to the tables

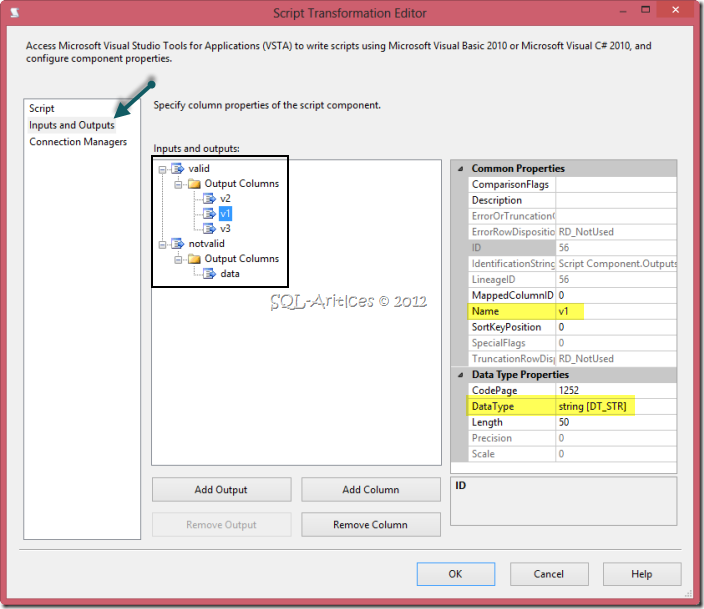

- Now double click on the script component task and go to “Inputs and Outputs” tab

- You already have an output hierarchy as “Output 0”, now rename it as “Valid” as shown below. To this destination we are going to pass all the valid data. Now add 3 columns to this output as our destination table (actual table) has 3 columns and choose a name & data type accordingly. (In this case I named column as V1,V2 & V3 and string for all the columns)

- Now add one more output hierarchy and add a single column to it as we are going to dump the entire line for the invalid data. Later You can process it manually or using script

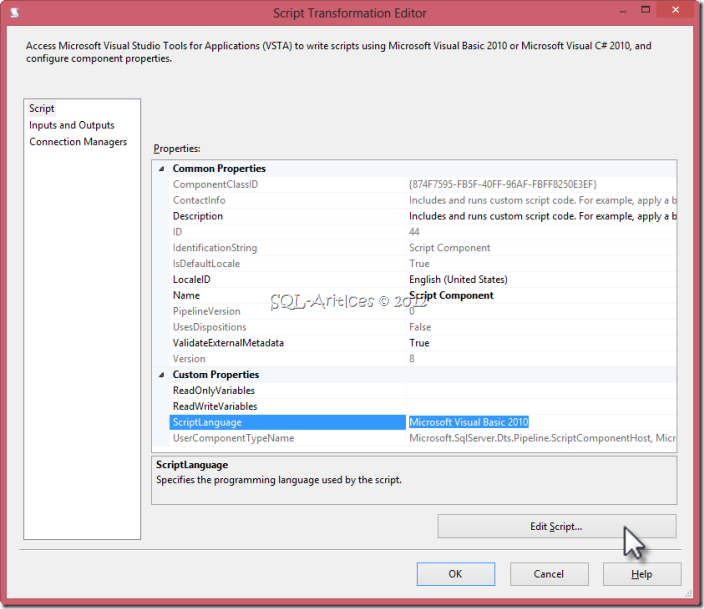

Now go to “Script” tab and change the script language to “Microsoft Visual Basic 2008” or “Microsoft Visual Basic 2010” and then click on “Edit Script” button, visual studio editor window will pop up

Add the below line to Public Class ScriptMain section

Dim FileReader As System.IO.StreamReader

Add the below line to Public Overrides Sub PreExecute() section

FileReader = New System.IO.StreamReader("C:\Temp\data.txt") 'Point your flat file here

Add the below line to Public Overrides Sub PostExecute() section

FileReader.Close()

Now add the below piece of code to Public Overrides Sub CreateNewOutputRows()

Dim LI As Integer = 0 While (Not FileReader.EndOfStream) Dim linedata As String = FileReader.ReadLine() 'Passing line data to linedata string If (LI <> 0) Then 'Ignoring header row Dim Array As String() = linedata.Split(Convert.ToChar(vbTab)) 'Split column values based on column delimiter, in this case its TAB If (Array.Length = 3) Then 'Since my base table has 3 columns, if the line doesnt have 3 values then pass the data to dummy table validBuffer.AddRow() validBuffer.v1 = Array(0) validBuffer.v2 = Array(1) validBuffer.v3 = Array(2) Else notvalidBuffer.AddRow() notvalidBuffer.data = linedata.ToString End If End If LI = LI + 1 'Goto Next line End While

Now the entire piece of code is below.

#Region "Imports"

Imports System

Imports System.Data

Imports System.Math

Imports Microsoft.SqlServer.Dts.Pipeline.Wrapper

Imports Microsoft.SqlServer.Dts.Runtime.Wrapper

#End Region

<Microsoft.SqlServer.Dts.Pipeline.SSISScriptComponentEntryPointAttribute> _

<CLSCompliant(False)> _

Public Class ScriptMain

Inherits UserComponent

Dim FileReader As System.IO.StreamReader

Public Overrides Sub PreExecute()

MyBase.PreExecute()

FileReader= New System.IO.StreamReader("C:\Temp\data.txt")

End Sub

Public Overrides Sub PostExecute()

MyBase.PostExecute()

FileReader.Close()

End Sub

Public Overrides Sub CreateNewOutputRows()

Dim LI As Integer = 0

While (Not FileReader.EndOfStream)

Dim linedata As String = FileReader.ReadLine()

If (LI <> 0) Then

Dim Array As String() = linedata.Split(Convert.ToChar(vbTab))

If (Array.Length = 3) Then

validBuffer.AddRow()

validBuffer.v1 = Array(0)

validBuffer.v2 = Array(1)

validBuffer.v3 = Array(2)

Else

notvalidBuffer.AddRow()

notvalidBuffer.data = linedata.ToString

End If

End If

LI = LI + 1

End While

End Sub

End Class

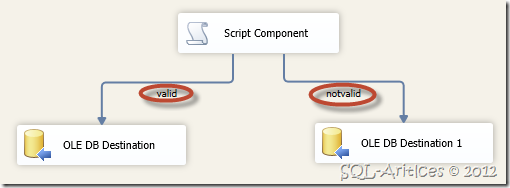

That’s it we are done with the split condition. Now we have to point the result set to appropriate tables. To accomplish this add two “OLE DB Destination” one for the actual destination table and the other one for the dummy table as shown below. Once done execute the package

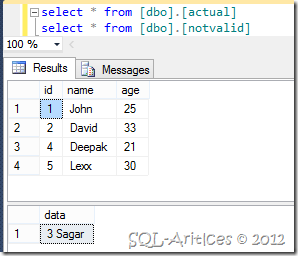

Now if we retrieve data from actual destination & dummy tables, we will get values as below

As you can see the proper data is stored in actual destination table and improper data is stored in the dummy table. Now you can either process the invalid data manually or you can write a script to automatically fetch the column values from dummy table to actual destination table. In my case all the records should have proper data so we decided to process it manually.

Leave a Reply